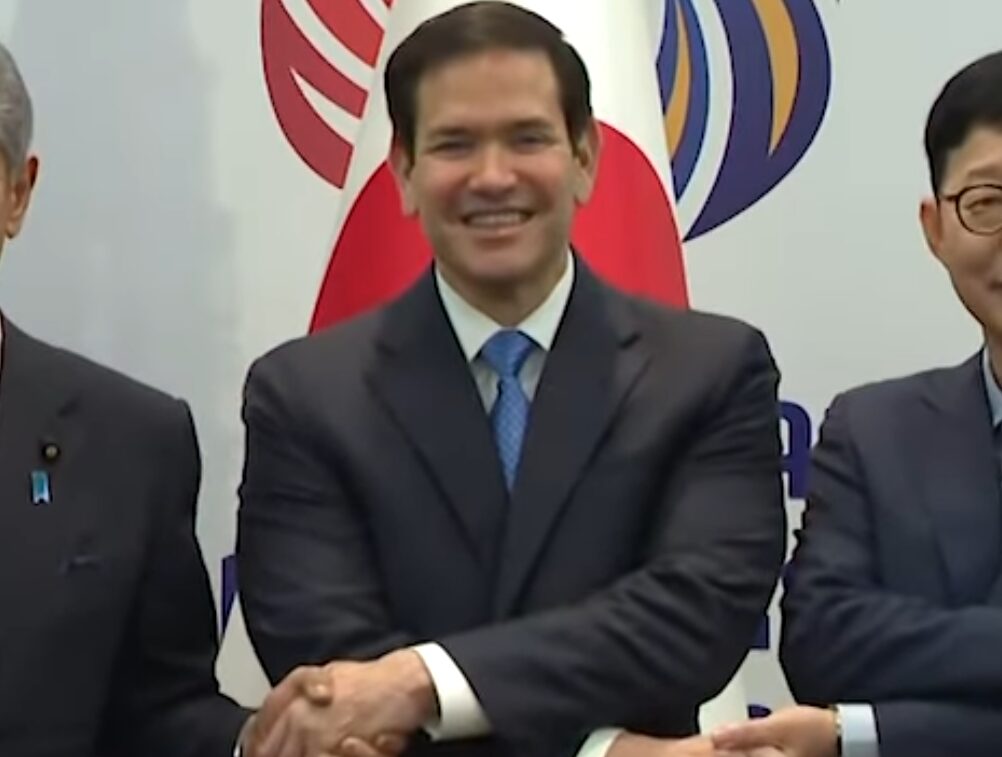

Fellow Americans, the age of deception has just escalated to dangerous and unprecedented heights. Imagine receiving a call from Secretary of State Marco Rubio himself—or so it appears—only to learn you’re speaking with an AI-generated impostor. This isn’t science fiction; it’s happening right now in our nation, and it’s a chilling wake-up call for all of us.

A disturbing series of attempts to mimic Secretary Rubio’s voice has sent shockwaves through the political and security communities. Scammers are using AI technology to create eerily accurate voice deepfakes, contacting foreign ministers, U.S. lawmakers, and even governors in brazen bids to extract sensitive information. According to a report by the Washington Post, the impostors went so far as to use a Signal account whose display name imitated an official email address, a detail that could fool even a vigilant recipient.

The incident involving Secretary Rubio isn’t an isolated event. The FBI sounded the alarm in May, warning of coordinated attempts to impersonate senior U.S. officials via AI-generated voice and text messages. A month later, Canadian cybersecurity authorities issued similar dire warnings, underscoring that this threat transcends borders. Sen. Tim Kaine (D-VA), despite often downplaying threats, openly admitted, “It’s a crazy new world out there. You gotta really worry about it.”

Rubio is not alone. Earlier this year, someone impersonating Susie Wiles, the White House chief of staff, reached out to senators, governors, and business leaders, reportedly after the contacts on her personal phone were compromised. Sen. Rick Scott (R-FL), who experienced the deception firsthand, described how convincing the scheme was: “It took me a second to realize it wasn’t her, but I figured it out.” Scott’s quick thinking prevented further harm, but others may not be so fortunate.

The rapid expansion of AI voice fraud is staggering. Vijay Balasubramaniyan, CEO of voice fraud prevention firm Pindrop, described a terrifying “1,300% explosion” in AI-driven scams over the past year alone. Balasubramaniyan warned, “With just a LinkedIn profile and a public audio clip, I can create a bot that speaks just like you.” Alarmingly, these AI impostors don’t merely replicate voices—they mimic emotions, empathy, and even casual conversational nuances, making them virtually indistinguishable from the real person.

Last year, thousands of New Hampshire voters received deceptive robocalls impersonating then-President Joe Biden, urging them to skip the state’s primary. In another alarming case, a deepfake caller posing as a senior Ukrainian official attempted to extract sensitive election information from then-Senate Foreign Relations Chair Ben Cardin (D-MD). These are not minor annoyances; they are blatant attempts to undermine our democracy and national security.

The Federal Communications Commission has already taken some steps, unanimously ruling last year that AI-generated robocalls are illegal. The ruling is commendable, but it does not address targeted impersonation scams, which remain a clear and present danger. Sen. Mike Rounds (R-SD), a respected voice on the Intelligence panel, emphasized the urgency of enhancing AI detection capabilities: “We’ve got to have better AI for detection of other AI deepfakes. This is going to be an ongoing battle.”

It’s abundantly clear that current laws are inadequate. While President Trump proactively signed into law measures targeting AI-generated explicit content, more aggressive legislation is needed to confront targeted political impersonations head-on. Unfortunately, efforts to implement comprehensive federal regulations remain gridlocked, leaving everyday Americans vulnerable to increasingly sophisticated scams.

Last year alone, Americans lost more than $12.5 billion to fraud—a staggering 25% increase from the previous year, according to the Federal Trade Commission. This isn’t a partisan issue; it’s a national security imperative demanding immediate bipartisan action.

We must demand greater accountability from tech companies and communication platforms. Messaging services like Signal and WhatsApp, along with the social media giants, must clearly label and flag AI-generated content, ensuring transparency and protecting users. Moreover, lawmakers must urgently prioritize additional legislation specifically targeting AI impersonation scams.

Our nation is under siege from invisible enemies wielding powerful new weapons of deception. The Rubio deepfake incident is a warning shot across the bow of our republic. If we fail to act decisively, we risk enabling fraudsters and foreign adversaries alike to exploit our vulnerabilities, deceive our citizens, and erode trust in our institutions. It’s time we take this threat seriously and respond with the urgency it demands.